What is a TPU (Tensor Processing Unit)?

A TPU is also a type of ASIC (Application-Specific Integrated Circuit), meaning it is built for a specific function — in this case, AI tasks.

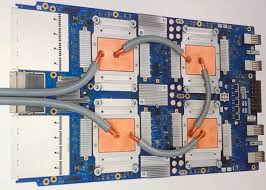

- Google began running TPUs in its data centres in 2015 and announced them publicly in 2016. They are specially designed hardware units built from the ground up to handle machine learning operations.

- TPUs focus on processing tensors — the multidimensional data arrays used in AI model computations.

- They are optimised to run neural networks efficiently, which for many workloads enables faster or more power-efficient training and inference than general-purpose GPUs or CPUs.

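- For example, a training run that might take weeks on a single GPU can, in some cases, be completed in hours on a large cluster of TPUs. The actual speed-up depends on the model and on the hardware being compared.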

- TPUs sit at the core of Google’s major AI services, such as Search, YouTube, and DeepMind’s large language models, which shows how they are applied in large-scale AI infrastructure.
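
To make the idea of a tensor concrete, here is a minimal sketch in Python using NumPy on an ordinary CPU. It only illustrates what "multidimensional data arrays" look like and the kind of matrix arithmetic a neural-network layer performs; it is not TPU code, and the names `dense_layer`, `x`, `w`, and `b` are hypothetical, chosen purely for illustration.

```python
import numpy as np

# Tensors of increasing rank (number of dimensions).
scalar = np.array(3.0)               # rank 0, shape ()
vector = np.array([1.0, 2.0, 3.0])   # rank 1, shape (3,)
matrix = np.ones((2, 3))             # rank 2, shape (2, 3)
batch = np.ones((4, 2, 3))           # rank 3, shape (4, 2, 3)


def dense_layer(x, w, b):
    """Hypothetical fully connected layer: matrix multiply, add bias, apply ReLU."""
    return np.maximum(x @ w + b, 0.0)


rng = np.random.default_rng(0)
x = rng.standard_normal((8, 16))   # a batch of 8 inputs with 16 features each
w = rng.standard_normal((16, 4))   # weights mapping 16 features to 4 outputs
b = np.zeros(4)                    # one bias value per output

y = dense_layer(x, w, b)
print(y.shape)  # (8, 4)
```

Most of the work in `dense_layer` is the matrix multiplication `x @ w`. Neural networks repeat operations of this kind many times over, which is why hardware specialised for tensor arithmetic can pay off.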